Full-reference Video Quality Assessment for User Generated Content Transcoding

¹ University of Bristol

² Tencent (US)

Abstract

Unlike video coding for professional content, the delivery pipeline for User Generated Content (UGC) involves transcoding, in which non-pristine reference content must be compressed repeatedly. In this work, we observe that existing full- and no-reference quality metrics fail to accurately predict the perceptual quality difference between transcoded UGC content and the corresponding non-pristine references. They are therefore unsuited to guiding rate-distortion optimisation during transcoding.

In this context, we propose a bespoke full-reference deep video quality metric for UGC transcoding. It features a transcoding-specific, weakly supervised training strategy built on a quality-ranking-based Siamese structure. The method is evaluated on the YouTube-UGC VP9 subset and the LIVE-Wild database, where it demonstrates state-of-the-art performance compared with existing video quality assessment (VQA) methods.

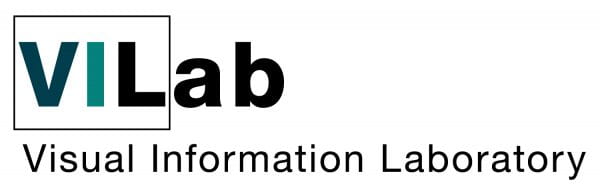

Generation of FR-UGC training data

(A) The training dataset is generated in two steps that simulate the UGC delivery pipeline. First, pristine source sequences are compressed with H.264 to obtain distorted references. Then, each distorted reference is further compressed by four popular codecs into 12 transcoded variants. This results in 9,288 transcoded sequences in total.
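The two-step generation above can be sketched as a set of ffmpeg command lines. This is a minimal illustration under stated assumptions: the page does not name the four codecs or their settings, so the encoder set (libx264, libx265, libvpx-vp9, libaom-av1), the CRF values, the 4 × 3 split into 12 variants, and the helper names `reference_command` and `transcode_commands` are all hypothetical.

```python
from itertools import product

# Hypothetical settings: the page only states "4 popular codecs" and
# "12 transcoded variants". The encoders and the 3 CRF levels per codec
# are illustrative assumptions, not the authors' configuration.
CODECS = {
    "h264": ["-c:v", "libx264"],
    "hevc": ["-c:v", "libx265"],
    "vp9": ["-c:v", "libvpx-vp9", "-b:v", "0"],  # -b:v 0 enables constant-quality mode
    "av1": ["-c:v", "libaom-av1", "-b:v", "0"],
}
CRF_VALUES = [23, 33, 43]  # hypothetical quality levels


def reference_command(source: str, ref_out: str, crf: int = 28) -> list[str]:
    """Step 1: compress a pristine source with H.264 to create a distorted reference."""
    return ["ffmpeg", "-y", "-i", source, "-c:v", "libx264", "-crf", str(crf), ref_out]


def transcode_commands(ref: str, stem: str) -> list[list[str]]:
    """Step 2: re-encode one distorted reference into codec x CRF variants."""
    cmds = []
    for (name, codec_args), crf in product(CODECS.items(), CRF_VALUES):
        out = f"{stem}_{name}_crf{crf}.mkv"  # Matroska can hold all four codecs
        cmds.append(["ffmpeg", "-y", "-i", ref, *codec_args, "-crf", str(crf), out])
    return cmds
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` on a machine whose ffmpeg build includes these encoders. Building the commands separately from running them keeps the transcoding plan easy to inspect before any encoding starts.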

(B) A proxy quality metric, VMAF, is used to generate reliable quality labels for the training content. Because pristine content is always used as the reference in the VMAF calculations, VMAF's prediction accuracy is maintained.
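The key point of step (B) is which video serves as the VMAF reference. A minimal sketch, assuming an ffmpeg build with libvmaf v2 (whose JSON log contains a `pooled_metrics` section) and clips with matching resolution and frame count; the helper names and the record layout, in which the model input pairs the transcoded clip with the distorted reference while the label comes from the pristine source, are hypothetical.

```python
import json


def vmaf_command(transcoded: str, pristine: str, log_path: str) -> list[str]:
    """Build an ffmpeg call that scores a transcoded clip against the PRISTINE source."""
    # ffmpeg's libvmaf filter treats the first input as the distorted video
    # and the second input as the reference video.
    vmaf_filter = f"libvmaf=log_fmt=json:log_path={log_path}"
    return ["ffmpeg", "-i", transcoded, "-i", pristine,
            "-lavfi", vmaf_filter, "-f", "null", "-"]


def mean_vmaf(log_text: str) -> float:
    """Read the pooled mean VMAF from a libvmaf v2 JSON log (assumed layout)."""
    return float(json.loads(log_text)["pooled_metrics"]["vmaf"]["mean"])


def training_record(distorted_ref: str, transcoded: str, label: float) -> dict:
    # Hypothetical record: the model compares the transcoded clip with the
    # distorted (non-pristine) reference, while the label was computed
    # against the pristine source.
    return {"reference": distorted_ref, "test": transcoded, "label": label}
```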

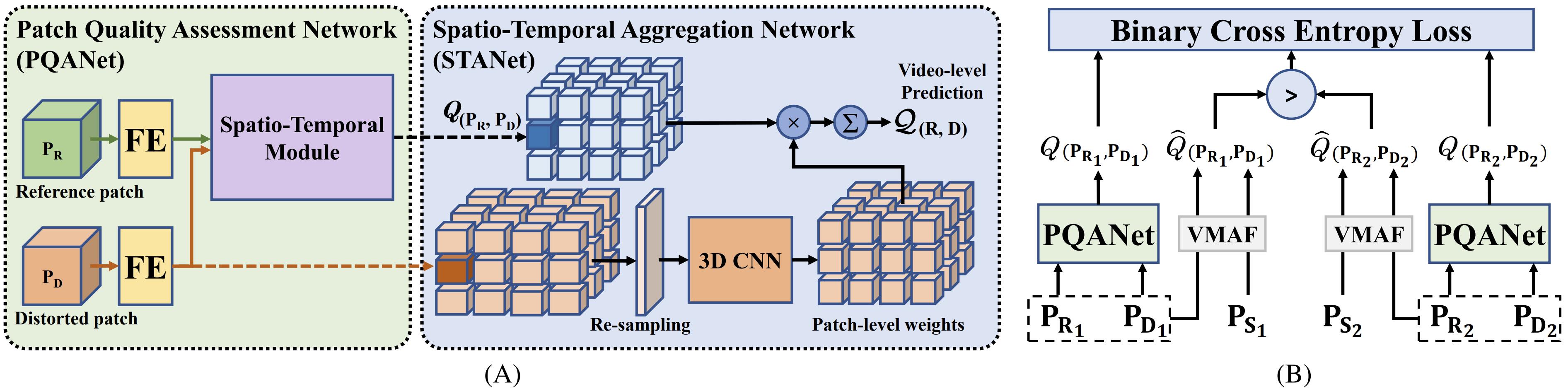

Model and loss function

(A) Overview of the inference workflow.

(B) Illustration of the proposed training strategy.
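The ranking-based Siamese training can be illustrated with a margin ranking (hinge) loss, the same form as PyTorch's `MarginRankingLoss`. This is a hedged sketch, not the authors' loss: the linear `score` stand-in for the shared network, the margin value, pairing two variants of the same distorted reference, and skipping tied labels are all assumptions. Only the order of the two VMAF proxy labels enters the loss, which is one way a weak supervisory signal can be used.

```python
def score(features: list[float], weights: list[float]) -> float:
    # Hypothetical stand-in for the shared quality network: both Siamese
    # branches call this one function with the same weights.
    return sum(f * w for f, w in zip(features, weights))


def margin_ranking_loss(s1: float, s2: float, y1: float, y2: float,
                        margin: float = 0.5) -> float:
    """Hinge loss on the predicted order; only the order of y1 and y2 is used."""
    if y1 == y2:
        return 0.0  # tied proxy labels are skipped in this sketch
    sign = 1.0 if y1 > y2 else -1.0
    return max(0.0, margin - sign * (s1 - s2))


def pair_loss(x1: list[float], x2: list[float], y1: float, y2: float,
              weights: list[float], margin: float = 0.5) -> float:
    # x1, x2: features of two transcoded variants of the same distorted reference
    # y1, y2: their VMAF proxy labels
    s1, s2 = score(x1, weights), score(x2, weights)
    return margin_ranking_loss(s1, s2, y1, y2, margin)
```

Because both branches share `weights`, the loss penalises a pair only when the predicted order disagrees with the label order or the score gap is smaller than the margin; the absolute VMAF values never need to be regressed.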

Useful Links

[DOWNLOAD] Code repositories and training data

Citation

@inproceedings{qi2024full,
  title={Full-reference video quality assessment for user generated content transcoding},
  author={Qi, Zihao and Feng, Chen and Danier, Duolikun and Zhang, Fan and Xu, Xiaozhong and Liu, Shan and Bull, David},
  booktitle={2024 Picture Coding Symposium (PCS)},
  pages={1--5},
  year={2024},
  organization={IEEE}
}

[paper]